Prompt injection has surged to the top of the OWASP GenAI Top 10 for 2025, becoming the defining threat vector for CISOs navigating LLM deployments across banking, healthcare, and enterprise SaaS. These attacks bypass traditional IT defenses not by targeting infrastructure, but by hijacking how LLMs interpret instructions.

In enterprise environments, where copilots, RAG-based workflows, and autonomous agents have access to sensitive systems and records, prompt injection isn't a theoretical risk. It's a regulatory, reputational, and operational threat, one that requires a new approach to runtime controls, red teaming, and AI governance.

What is Prompt Injection?

Prompt injection occurs when attackers insert crafted instructions into LLM input streams, causing unintended behaviors. As highlighted in the Lakera prompt injection guide, these inputs often override internal prompts, leak secrets, or initiate actions.

Two main types of injection dominate:

- Direct injection: User explicitly tells the model to bypass prior instructions.

- Indirect injection: Instructions are hidden inside external content, documents, emails, URLs, or RAG indexes, which are later processed by the model.

.png)

Consequences include:

- System prompt disclosure

- Unauthorized data access or generation

- Inaccurate or manipulated outputs

- Tool misuse, sending emails, initiating workflows, or changing records

A deep dive from FireTail shows that even basic prompt overrides remain effective against many commercial LLM systems.

Enterprise Incidents: From Copilots to Compliance Violations

Prompt injection has breached the enterprise perimeter in multiple real-world incidents:

- EchoLeak / Microsoft 365 Copilot: Malicious instructions embedded in emails were silently parsed by Copilot, causing zero-click data leaks. This case, tracked as CVE-2025-32711, exemplifies the dangers of non-sanitized input streams.

- GitLab Duo Exploit: Attackers inserted injection payloads into merge request comments. As described by CalypsoAI, this exposed private source code and triggered unintentional output delivery.

- Banking RAG Agents: Customer queries laced with hidden prompts tricked chatbots into leaking account data, despite existing RBAC. Promptfoo’s RAG red teaming guide outlines similar test scenarios.

- Healthcare Bots: Virtual assistants generated treatment advice and revealed PHI after ingesting maliciously crafted intake notes, resulting in HIPAA action.

Each case underscores how attacker creativity evolves faster than enterprise AI security maturity.

How Attacks Work: Prompt Injection Vectors in 2025

As detailed in the Pangea threat study, prompt injection is no longer limited to textual commands. Key attack vectors include:

- Instruction Overrides: Classic commands like "Ignore all previous instructions" still bypass logic gates in some models.

- Encoded and Multilingual Payloads: Using invisible characters, unicode, or foreign scripts to slip past filters.

- Hybrid Agentic Exploits: Prompts that cascade through agent workflows, e.g., sending unauthorized files via API, highlighted in hybrid attack research.

- Cross-Modal Attacks: Hidden instructions inside PDFs or images, now interpretable by multimodal models.

- Index Poisoning in RAG: Attackers seed RAG databases with hostile text, manipulating downstream outputs, a growing concern flagged by TechDemocracy.

.png)

EchoLeak: The Zero-Click Breach

One of the most alarming incidents to date is EchoLeak. The vulnerability emerged from how Microsoft 365 Copilot parsed email content via RAG. A malicious actor embedded stealth instructions in a plain-text email. No clicks. No attachments. Just ingestion.

When Copilot interpreted the email, the instructions activated silently, exfiltrating sensitive content. This case has now been cited across OWASP and industry papers as the first “zero-click prompt injection” exploit in production enterprise software.

Watch: EchoLeak Breakdown , How Silent Prompt Injection Works

Add Video

Prompt Injection Readiness Checklist for Enterprises

Best Practices from Field Research

Recent research from firms like Metomic and Promptfoo reinforce several best practices that CISOs should operationalize:

- Segregate inputs: Never combine untrusted and internal prompts without clear boundaries.

- Reinforce system prompts: Re-assert boundaries and policies across session lifespan, not just at initiation.

- Inject meta-prompts: Inform LLMs explicitly of forbidden topics and role scope.

- Red-team constantly: Build attack scenarios into CI/CD pipelines. Use adversarial testing tools like Promptfoo.

- Sanitize outputs: Use rule-based scrubbing for hallucinated URLs, PII, or policy violations.

- Log & monitor sessions: Enable taint tracking and behavioral anomaly detection for post-event audits.

The Google security team recommends embedding runtime policies into orchestration layers, not just system prompts.

Conclusion

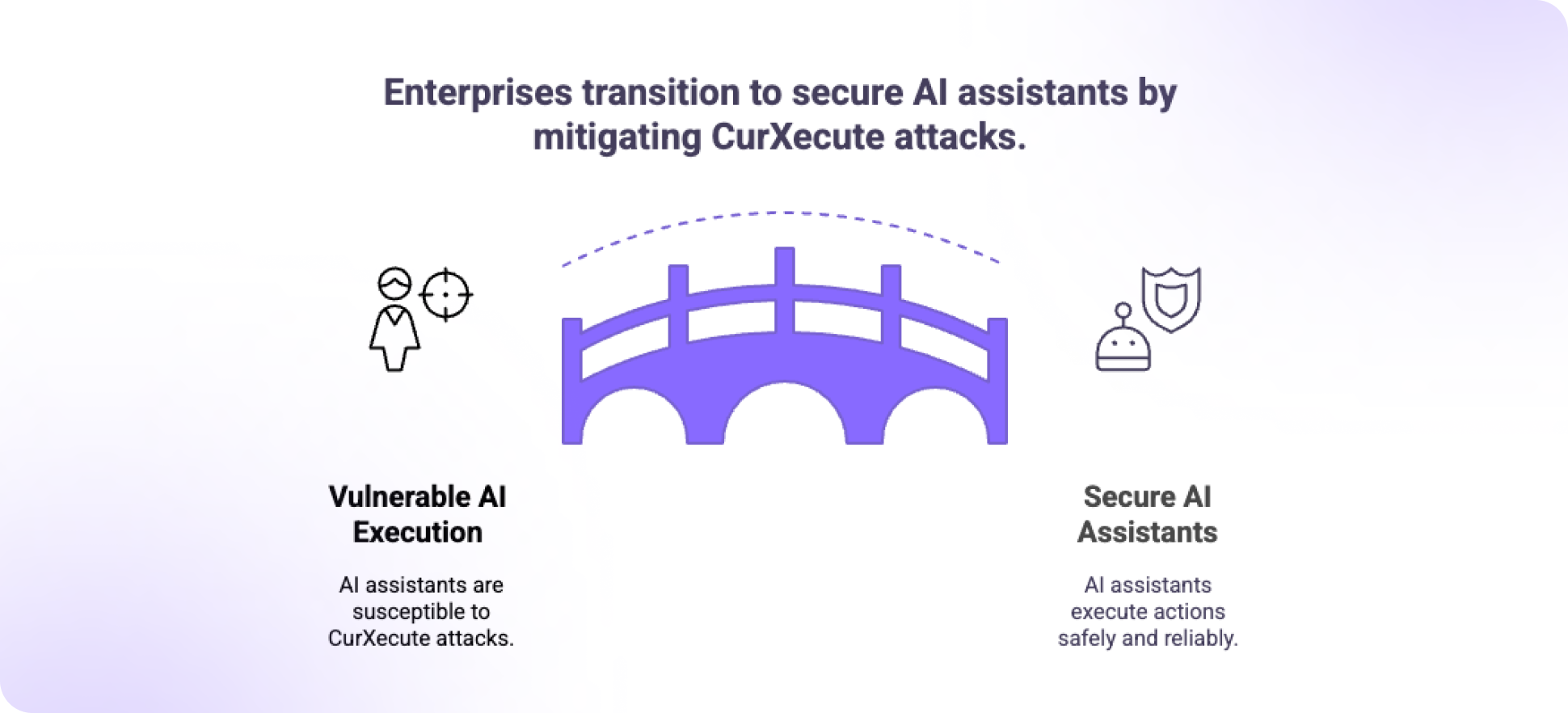

Prompt injection isn’t just an LLM problem, it’s a new class of exploit for the GenAI era. Enterprises must now treat prompt streams with the same caution as API calls or database writes. The most resilient organizations will be those that build layered security into the heart of their AI infrastructure.

As research from OWASP and CalypsoAI confirms, surviving in the era of agentic AI means embedding governance, not just trusting guardrails.

.png)