As enterprises adopt AI assistants at scale, the security perimeter is shifting. No longer are organizations only defending servers, endpoints, and applications. Now they must also secure the actions an AI assistant can take on their behalf. This new responsibility has given rise to novel attack methods designed to manipulate AI behavior at the execution layer. One such method is CurXecute.

CurXecute is not science fiction. It is a growing risk that exploits the trust enterprises place in AI assistants to interpret and act on instructions. In this blog, we’ll unpack what CurXecute is, how it works, the risks it creates, and what organizations can do to defend against it.

What is CurXecute

CurXecute is an AI execution attack. It targets the gap between what an AI assistant understands and what it actually executes when connected to enterprise systems.

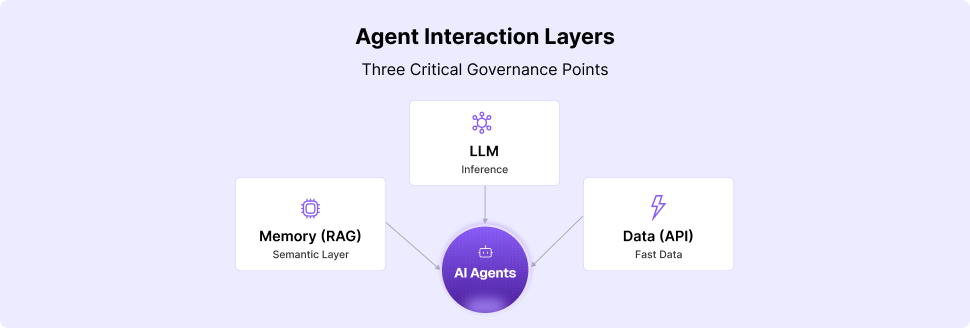

Most modern assistants are not limited to generating text. Through protocols like MCP and integrations with internal APIs, they can run queries, update records, trigger workflows, and even make changes in production systems.

CurXecute exploits this power by tricking the assistant into executing harmful or unintended commands. Unlike data poisoning, which alters inputs, or prompt injection, which manipulates context, CurXecute focuses specifically on the execution layer where instructions turn into real-world actions.

How CurXecute Works

CurXecute takes advantage of the fact that AI assistants are designed to be helpful and action-oriented. When asked to perform a task, they translate the request into executable steps using tool definitions, APIs, or automation scripts. Attackers manipulate this process in several ways:

1. Malicious Instruction Embedding

Attackers insert hidden or misleading instructions inside user inputs, documents, or datasets. When the assistant processes them, it interprets the malicious instructions as legitimate execution steps.

Example: An email disguised as a support ticket includes hidden text instructing the assistant to delete or overwrite files.

2. Command Ambiguity

AI assistants often have to interpret vague or natural-language commands. CurXecute exploits this ambiguity by crafting requests that appear harmless but are interpreted as high-risk actions.

Example: “Clean up old accounts” could be misinterpreted as deleting active user profiles.

3. Exploiting Tool Definitions

The assistant relies on tool definitions to know what it can and cannot do. If these definitions are too broad or poorly scoped, CurXecute can push the AI to execute commands beyond its intended purpose.

Example: A database query tool without restrictions allows attackers to fetch sensitive fields or drop entire tables.

.png)

Why CurXecute is a Serious Risk

AI assistants are not just advisors. They are actors inside enterprise systems. A CurXecute attack can therefore have direct, real-world consequences that go far beyond misleading text output.

Business Impact

- Operational Downtime: Harmful execution can disrupt systems or workflows.

- Data Loss: Deletion or corruption of critical records.

- Compliance Failures: Unauthorized execution leading to privacy or regulatory breaches.

- Loss of Trust: Teams may stop using AI assistants if they execute harmful actions, even unintentionally.

Security Impact

- Privilege Escalation: Attackers may trick assistants into performing actions they would not otherwise have access to.

- Systemic Risk: One successful CurXecute attack can cascade across interconnected systems.

- Low Detectability: Harmful execution may initially look like normal assistant behavior, making detection difficult.

CurXecute represents the weaponization of AI helpfulness. The same trait that makes assistants valuable also makes them vulnerable.

Real-World Scenarios

To illustrate the risk, here are some ways CurXecute might unfold in enterprise settings:

- CRM Manipulation

A poisoned input convinces an AI assistant to bulk-delete thousands of customer records while trying to “clean up duplicate entries.” - Finance Systems

An ambiguous request like “generate vendor payments” is interpreted as approving all pending invoices, leading to financial leakage. - DevOps and Infrastructure

Through a loosely defined tool integration, a CurXecute attack pushes an AI coding assistant to deploy untested code into production, causing outages. - Healthcare Systems

Hidden instructions inside patient documents lead an AI to overwrite test results, corrupting medical histories.

These examples highlight how CurXecute blurs the line between cyberattack and business process failure.

How to Defend Against CurXecute

Mitigating CurXecute requires treating AI assistants as privileged actors inside the enterprise environment. The same principles that apply to human administrators or automation scripts must apply to AI execution.

1. Principle of Least Privilege

Every tool exposed to the AI should be tightly scoped. The assistant should only be able to execute the minimum commands necessary for its role.

2. Guardrails in Tool Design

Tool definitions should include explicit constraints and validation. For instance, a database query tool should prevent destructive commands like DROP or DELETE without human approval.

3. Execution Approval Layers

For high-risk actions, AI should not execute commands autonomously. Human-in-the-loop approval can prevent catastrophic outcomes.

4. Input Sanitization

Sanitize and pre-process user inputs to strip out malicious or ambiguous instructions before the AI interprets them.

5. Continuous Monitoring

Log all AI-initiated actions. Use anomaly detection to flag unexpected patterns of execution, such as mass deletions or sudden access to sensitive data.

6. Training and Awareness

Educate teams about how CurXecute attacks can manifest. Awareness among end-users and administrators is often the first defense against subtle exploitation.

%20(1).png)

The Road Ahead

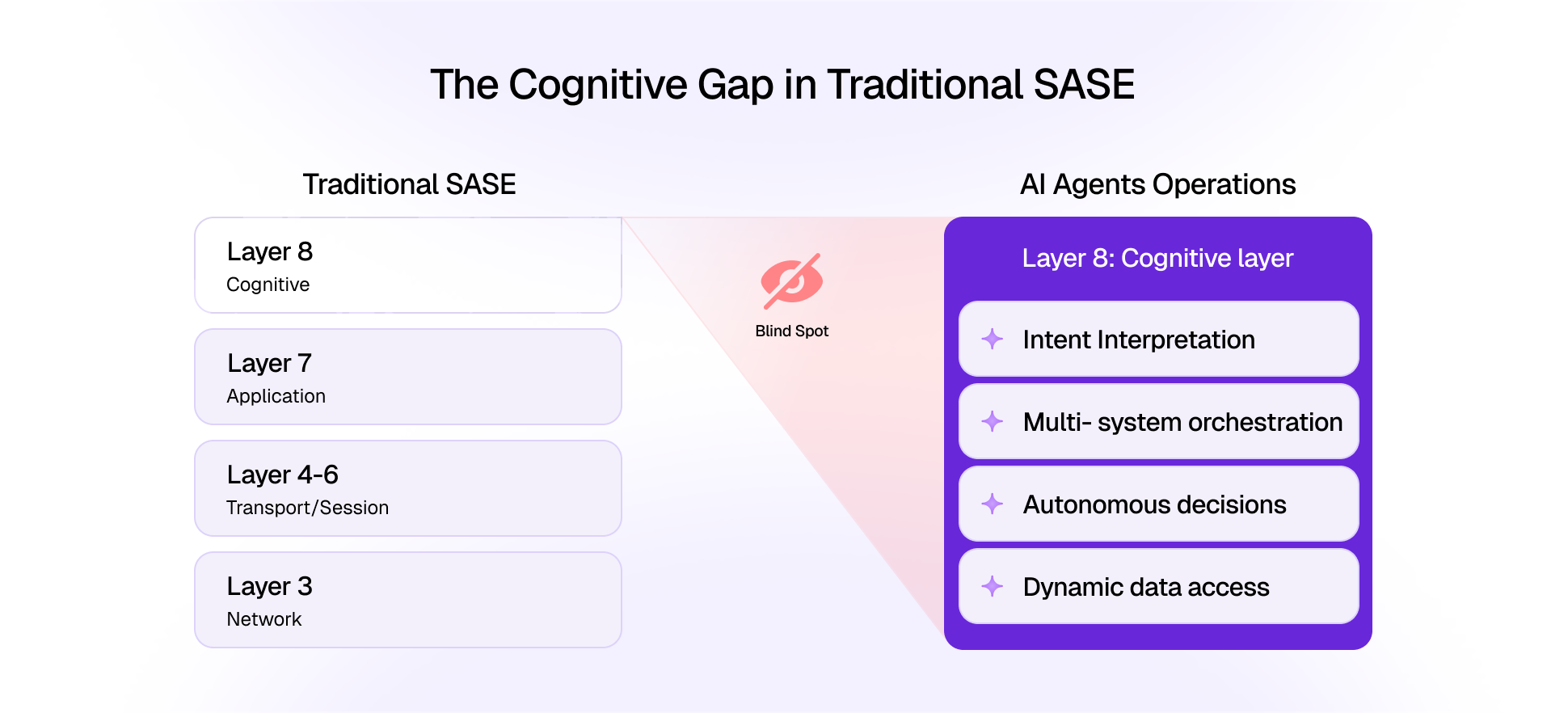

CurXecute represents the next phase of AI risk. While MCPoison corrupts the context feeding AI, CurXecute manipulates the execution of actions. Both highlight the reality that AI security is no longer only about protecting models. It is about securing the full lifecycle of inputs, context, execution, and outcomes.

Organizations that want to safely embrace AI assistants need to build execution security into their strategy. Just as DevOps evolved into DevSecOps, AIOps must evolve into AISecOps where every action triggered by an assistant is subject to the same scrutiny as actions taken by human users or automated scripts.

At Daxa, we help enterprises adopt AI responsibly by anticipating risks like CurXecute. The promise of AI lies not just in its intelligence but in its ability to act. To harness that promise safely, organizations must build resilience against attacks that exploit execution layers.

The future of enterprise AI will not just be about smarter models. It will be about safer execution. CurXecute is a warning sign that now is the time to act.