Going to #RSAC2025?

Read More

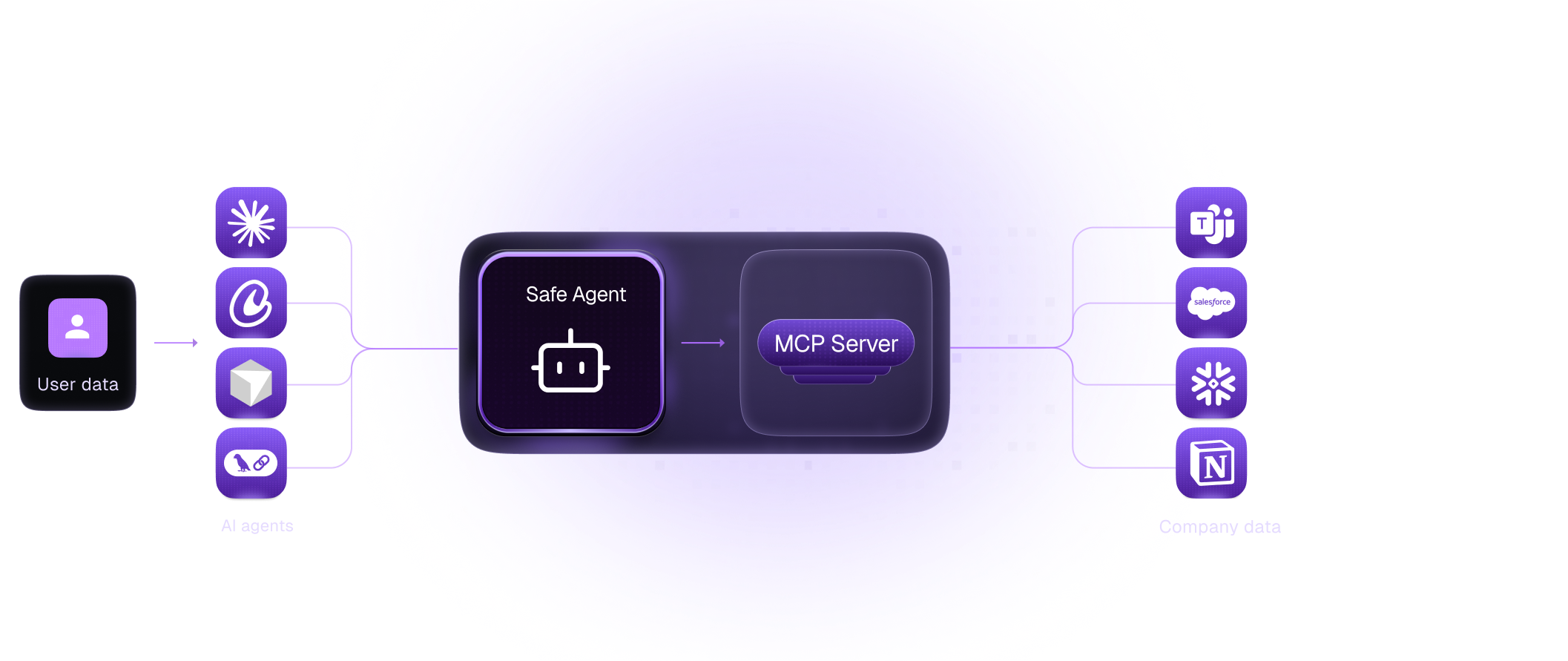

Daxa.ai Launches Proxima: The Secure AI Knowledge Engine for Regulated Industries

What’s the Biggest Blindspot in AI Security Today? Insights from Inflection AI’s Panel

DAXA at JPMorganChase's Technology Innovation Forum

Daxa Recognized in the 2025 Gartner® Market Guide for AI Trust, Risk and Security Management