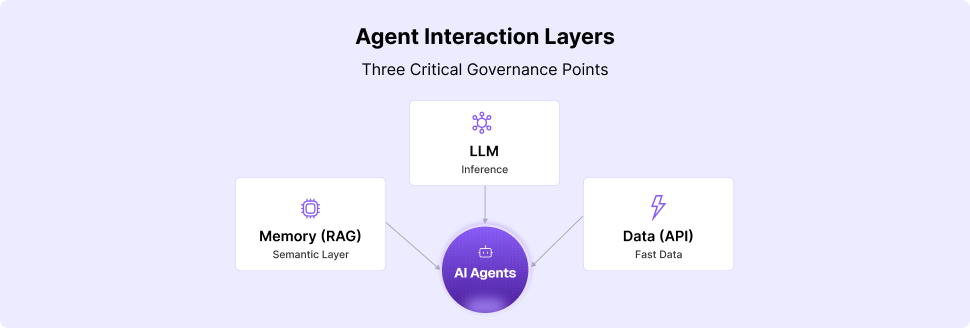

Artificial Intelligence is no longer an experiment in most organizations. It is being woven into everyday workstreams, from writing and summarizing content to automating engineering and customer support. At the heart of this adoption is the ability of AI assistants to integrate with enterprise systems, tools, and data through the Model Context Protocol (MCP).

This integration gives AI its superpower. Instead of just generating text in isolation, it can fetch real data, call APIs, query databases, and even trigger automation pipelines. But with that power comes a new class of threats. One of the most concerning is MCPoison: a method of compromising AI workflows by corrupting the very context and tool definitions that guide an assistant’s actions.

This blog takes a closer look at what MCPoison is, how it works, why it matters for enterprises, and how leaders can respond.

What is MCPoison

MCPoison is an attack that targets the Model Context Protocol. MCP is the layer that bridges an AI model with external tools and information. For example, an AI assistant in a financial services company might use MCP to:

- Query a customer database

- Fetch the latest compliance policy

- Trigger a workflow in Salesforce

- Summarize data from an internal report

The model itself does not have direct access to these systems. Instead, MCP defines the rules and endpoints it can interact with.

In an MCPoison attack, malicious actors tamper with these definitions or the data flowing through them. The goal is not to break the model but to subtly alter its behavior. This can lead to corrupted outputs, unauthorized actions, or trust erosion in the assistant itself.

How MCPoison Works

To understand MCPoison, think of it like a supply chain attack for AI. Instead of directly hacking the model, attackers exploit the ecosystem around it. Common methods include:

1. Poisoned Data Feeds

If an AI relies on a connected knowledge base or API, attackers may insert misleading or malicious information into that feed. The assistant then consumes poisoned data and makes flawed recommendations.

Example: A market research feed is altered to show incorrect pricing trends, leading the AI to generate bad strategic advice.

2. API Manipulation

MCP uses tool definitions to describe what actions an AI can take. Attackers may tamper with these definitions so the AI unknowingly executes harmful commands.

Example: A harmless-looking “fetch report” tool is modified to also expose sensitive customer details.

3. Instruction Injection

Malicious prompts or hidden instructions are embedded inside enterprise documents, emails, or tickets that the AI processes. When the assistant reads them, it executes unintended actions.

Example: A support ticket contains hidden text instructing the AI to share confidential database entries.

%20(1).png)

Why MCPoison is Dangerous for Enterprises

AI assistants are valuable because they can act, not just think. They can move data between systems, trigger processes, and influence decision-making. If MCP is poisoned, those actions can quickly become liabilities.

Business Impact

- Corrupted Decisions: Leaders may rely on AI outputs that are based on poisoned context, resulting in poor strategic calls.

- Operational Disruption: Automated workflows can be derailed or misdirected, slowing down teams.

- Compliance Risks: Unauthorized access or data exposure can trigger regulatory penalties.

- Erosion of Trust: Employees may lose confidence in AI tools if they begin producing suspicious results.

Security Impact

- Lateral Movement: Attackers may use MCP to pivot across enterprise systems.

- Stealth: MCPoison does not always create obvious errors. The corruption may be subtle, making detection difficult.

- Scalability of Damage: Once poisoned, an AI assistant can replicate flawed outputs at scale, multiplying the harm.

In many ways, MCPoison combines the worst of data poisoning, prompt injection, and supply chain exploitation into one attack surface.

Real-World Scenarios

To make the risk more tangible, here are a few scenarios of how MCPoison might play out inside enterprises:

- Financial Services

A poisoned market data feed convinces an AI-powered assistant to recommend faulty investment strategies, exposing firms to reputational and monetary loss. - Healthcare

Instruction injection in medical records causes an AI assistant to misinterpret patient histories, increasing the risk of incorrect treatment recommendations. - Software Development

A manipulated MCP tool definition allows an AI coding assistant to write code that includes hidden vulnerabilities, opening the door for further exploits.

These are not futuristic science fiction scenarios. They build on attack methods already observed in prompt injection and data poisoning research. MCPoison simply extends the risk into the orchestration layer that ties AI to enterprise systems.

Protecting Against MCPoison

The good news is that enterprises do not need to abandon MCP or AI assistants. The key is to treat MCP as part of the security perimeter and design safeguards around it.

Here are five practical steps:

1. Audit Tool Definitions

Every tool exposed to MCP should be reviewed for access scope, permissions, and side effects. Limit what an AI can do to the minimum necessary.

2. Validate Data Sources

Build integrity checks into data feeds connected to MCP. If the assistant consumes poisoned inputs, the outputs will be compromised no matter how advanced the model is.

3. Context Filtering

Use middleware or monitoring layers that sanitize inputs before they reach the AI. This can help strip out hidden instructions or malicious payloads.

4. Continuous Monitoring

Treat MCP pipelines like a software supply chain. Regular scans, anomaly detection, and detailed logging can catch subtle corruption before it spreads.

5. User Training

Educate teams on prompt injection, poisoning risks, and suspicious behaviors. Awareness often makes the difference in early detection.

.png)

The Road Ahead

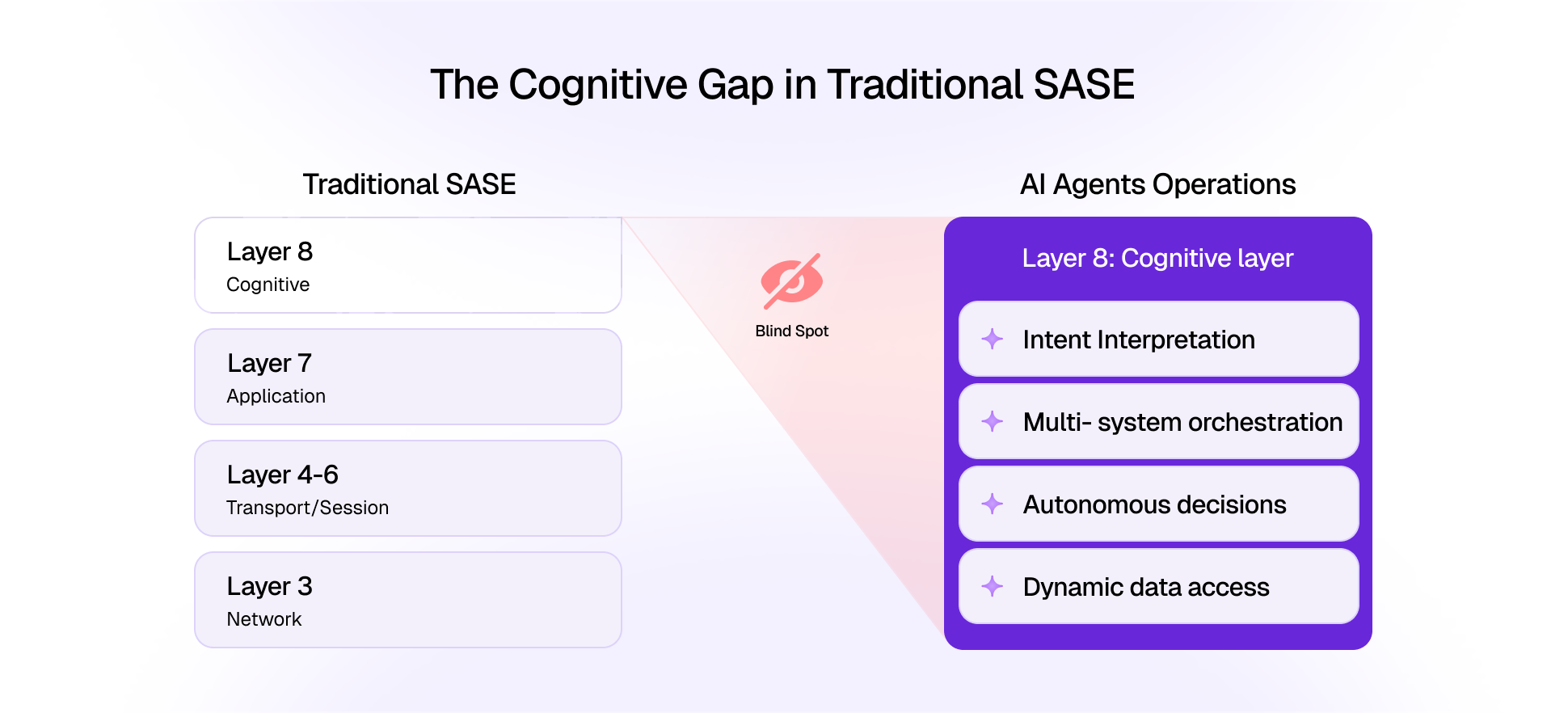

MCPoison is not just another security buzzword. It represents a shift in where enterprises must focus their defenses. For years, AI security discussions centered on the models themselves. But the real risk increasingly lies in the ecosystem around the model: the data, tools, and protocols that give AI its usefulness.

Forward-thinking organizations will treat MCP security with the same seriousness as network, cloud, or application security. Just as DevSecOps became a standard for software, MCP-secure AI operations will become a baseline expectation.

At Daxa, we believe that AI assistants can give enterprises a competitive advantage only if they are deployed responsibly. Recognizing threats like MCPoison is the first step. The next is building resilience at every layer of the stack, so organizations can harness AI safely and confidently.