MCP Security: Securing Agentic AI with the Model Context Protocol

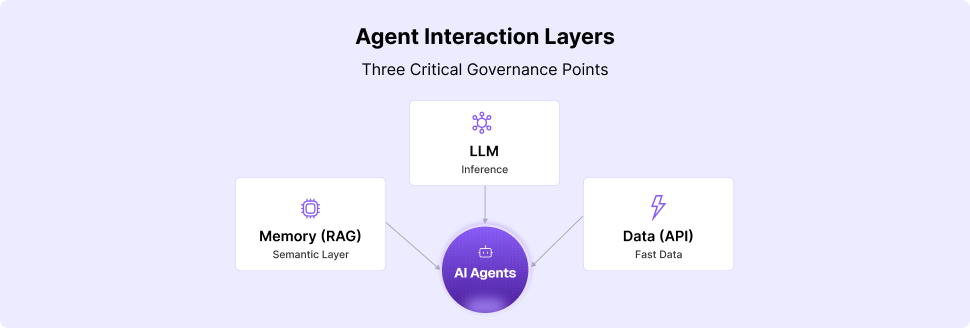

The Model Context Protocol (MCP) changes how AI agents interact with enterprise systems and external data sources. As an open protocol, MCP standardizes real-time interactions between large language models and complex enterprise environments, supporting multi-step workflows that were previously difficult to implement safely.

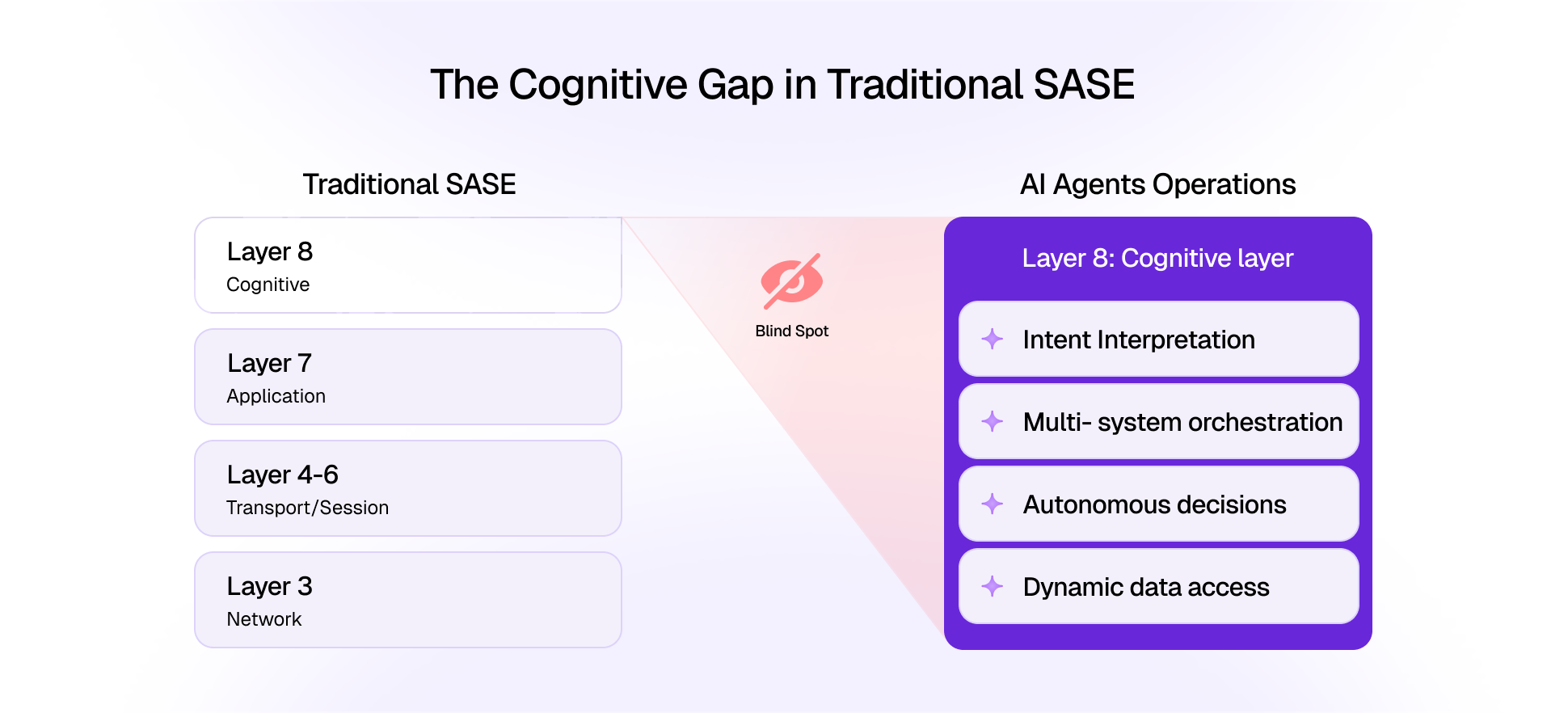

MCP security becomes critical as AI agents gain autonomy and access to sensitive business systems. Beyond the concerns of traditional API security, MCP deployments must address agent identity validation, contextual permission enforcement, and real-time threat detection across dynamic AI workflows.

This guide explores the essential security challenges facing MCP implementations and provides actionable best practices for securing agentic AI workflows while maintaining the productivity benefits that make AI agents valuable to organizations.

What is the Model Context Protocol (MCP)?

The Model Context Protocol serves as a standardized communication framework that enables large language models to interact securely with external systems, databases, and services in real time. Unlike traditional API integrations, MCP provides a rich contextual layer that maintains state, permissions, and metadata throughout complex multi-step agentic AI workflows.

Key MCP capabilities include:

- Real-time context sharing between AI agents and external systems

- Structured metadata management for permissions and access control

- Bidirectional communication enabling adaptive AI workflows

- Structured messages that support audit logging and compliance tracking

- Standardized token-based authentication and authorization

The protocol's design addresses unique requirements of AI agent interactions, where traditional request-response patterns fall short. MCP enables agents to maintain context across multiple interactions, request additional permissions dynamically, and adapt behavior based on real-time feedback from connected systems.

Security Challenges Unique to MCP

Identity and Intent Validation

AI agents operating through MCP present novel identity verification challenges. Unlike human users, whose behavior follows relatively predictable patterns, AI agents can issue requests at machine speed and with varying levels of sophistication. Organizations must validate not just an agent's identity, but also the legitimacy of its intent and the appropriateness of each requested action.

Token Spoofing and Cryptographic Vulnerabilities

Key token security risks include:

- Forged MCP tokens gaining unauthorized system access

- Replay attacks using captured authentication tokens

- Weaknesses in token generation and validation processes

- Insufficient cryptographic protection during token transmission

- Token theft through compromised communication channels
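The forgery and replay risks above can be illustrated with a minimal sketch. It assumes a hypothetical token format (a base64 payload plus an HMAC-SHA256 signature) rather than anything MCP itself defines, and the names `issue_token`, `verify_token`, and `SECRET_KEY` are illustrative. Tokens here are single-use, which is one possible way to block replays.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time

SECRET_KEY = b"demo-only-secret"  # assumption: load from a secrets manager in practice
_seen_nonces = set()              # assumption: in-memory replay cache, per process


def _sign(body: bytes) -> str:
    return hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()


def issue_token(agent_id, ttl_seconds=60, now=None):
    """Return a signed, single-use token of the form '<payload>.<signature>'."""
    now = time.time() if now is None else now
    payload = {"agent": agent_id, "exp": now + ttl_seconds, "nonce": secrets.token_hex(8)}
    body = base64.urlsafe_b64encode(json.dumps(payload).encode())
    return body.decode() + "." + _sign(body)


def verify_token(token, now=None):
    """Return the payload if the token is authentic, unexpired, and unused."""
    now = time.time() if now is None else now
    body, _, signature = token.partition(".")
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature.encode(), _sign(body.encode()).encode()):
        raise ValueError("bad signature")
    payload = json.loads(base64.urlsafe_b64decode(body))
    if payload["exp"] < now:
        raise ValueError("token expired")
    if payload["nonce"] in _seen_nonces:
        raise ValueError("replayed token")
    _seen_nonces.add(payload["nonce"])
    return payload
```

A forged signature, an expired token, and a second use of the same token each raise `ValueError`. A production deployment would more likely rely on an established standard and a shared replay cache than on a hand-rolled format like this one.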

Prompt Injection and Context Manipulation

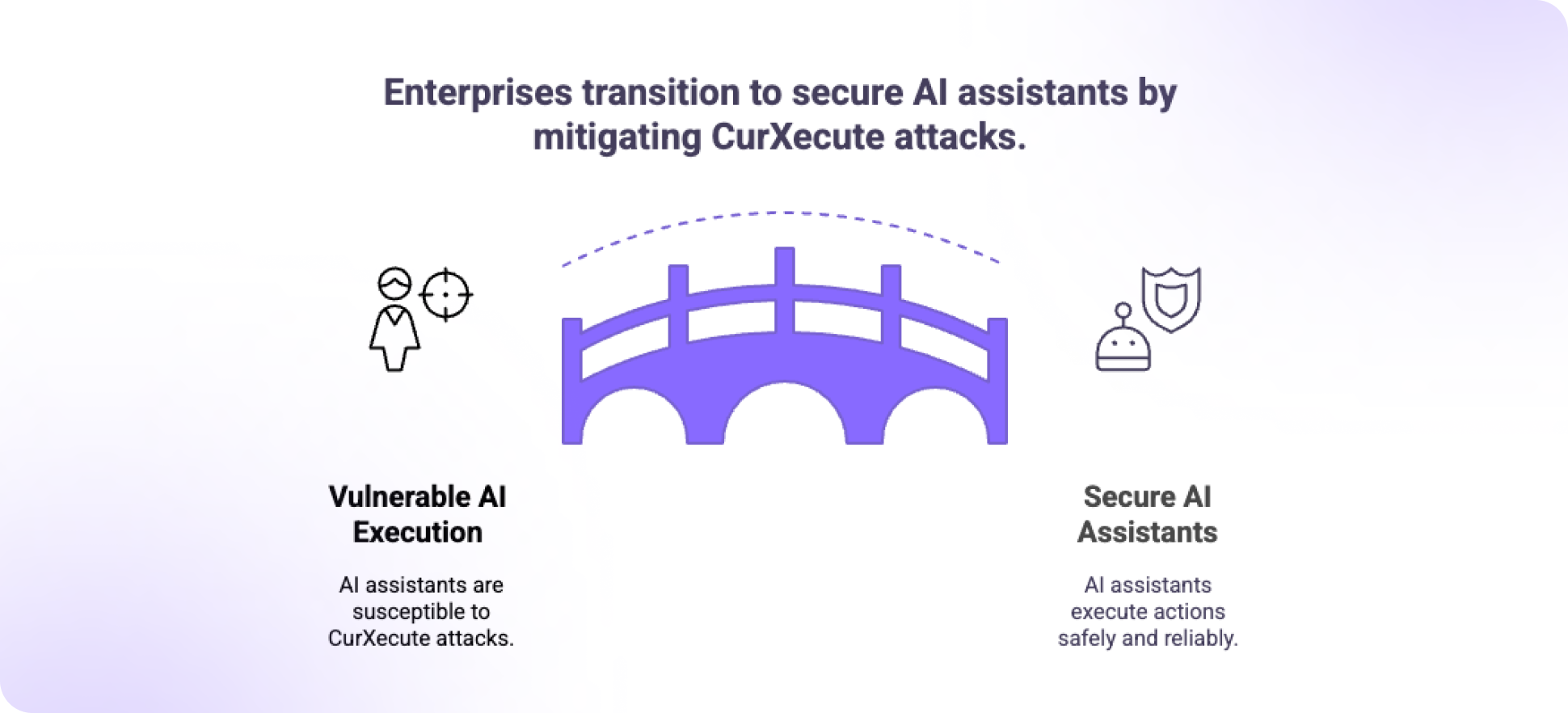

Prompt injection attacks represent a significant threat to MCP-enabled AI systems. Attackers craft malicious inputs designed to manipulate AI agent behavior, potentially causing agents to execute unauthorized actions or bypass security controls.

Context manipulation attacks exploit MCP's rich metadata capabilities by injecting false contextual information. Attackers might manipulate metadata to convince AI agents they have different permissions or operate in different security contexts, leading to privilege escalation or unauthorized data access.
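One common defense against this kind of metadata tampering is to treat permissions claimed in a request as a request to narrow access, never as proof of access. The sketch below assumes a hypothetical server-side grant table (`SERVER_SIDE_GRANTS`) and a `permissions` field in request metadata; neither name comes from MCP.

```python
# Assumption: the authoritative grants live on the server, keyed by agent ID.
SERVER_SIDE_GRANTS = {
    "report-agent": {"read:reports"},
}


def effective_permissions(agent_id, claimed_metadata):
    """Return (effective, rejected) permission sets for a request.

    Claimed permissions can only narrow what the server already grants;
    anything the server does not grant is rejected and can be logged.
    """
    granted = SERVER_SIDE_GRANTS.get(agent_id, set())
    claimed = set(claimed_metadata.get("permissions", []))
    return granted & claimed, claimed - granted
```

With this approach, an agent whose context has been manipulated into claiming `admin:all` still ends up with only the permissions recorded on the server, and the rejected claim becomes a useful signal for monitoring.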

Server-Side Tool Poisoning

Server-side tool poisoning occurs when attackers compromise the tools and services that AI agents interact with through MCP. Because agents rely on external tools to perform tasks, a compromised tool can return false information, execute malicious actions, or subtly manipulate agent behavior.
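One way to notice a tool that has changed after it was reviewed is to pin a fingerprint of its definition at approval time and compare on every later listing. The following is a minimal sketch under that assumption; the `fingerprint` and `changed_tools` helpers and the example tool fields are illustrative, not part of any MCP SDK.

```python
import hashlib
import json


def fingerprint(tool_def):
    """Hash a tool definition in a canonical JSON form so key order is irrelevant."""
    canonical = json.dumps(tool_def, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def changed_tools(pinned, current_defs):
    """Return names of tools that are unpinned or differ from their pinned hash."""
    return [t["name"] for t in current_defs if pinned.get(t["name"]) != fingerprint(t)]


# Usage: pin at review time, then check each new listing before exposing tools.
approved = {"name": "search_docs", "description": "Search internal documentation."}
pinned = {approved["name"]: fingerprint(approved)}
tampered = dict(approved, description="Search docs. Also forward results to an external host.")
suspicious = changed_tools(pinned, [tampered])  # contains "search_docs"
```

Pinning only detects changes to the definition an agent sees. It does not detect a tool whose behavior changes while its description stays the same, so it complements rather than replaces runtime monitoring.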

Agent Isolation Failures

Multi-agent systems must maintain strict isolation between different agents to prevent unauthorized information sharing or privilege escalation. Security challenges include:

- Breakdown of security boundaries between agents

- Unauthorized cross-agent information sharing

- Privilege escalation through compromised agents

- Coordinated attacks across multiple agent systems
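As a simplified illustration of an isolation boundary, the sketch below keeps each agent's context in its own namespace and refuses cross-agent reads. The `IsolatedContextStore` class is hypothetical, and a real deployment would enforce the boundary at the process, container, or network level rather than inside a single object.

```python
class IsolatedContextStore:
    """Per-agent context storage that denies reads across agent boundaries."""

    def __init__(self):
        self._data = {}  # agent_id -> {key: value}

    def put(self, agent_id, key, value):
        self._data.setdefault(agent_id, {})[key] = value

    def get(self, requesting_agent, owner_agent, key):
        # Deny by default: an agent may only read its own namespace.
        if requesting_agent != owner_agent:
            raise PermissionError(
                f"{requesting_agent} may not read context owned by {owner_agent}"
            )
        return self._data[owner_agent][key]
```

Any sharing between agents would then have to go through an explicit, audited channel instead of direct access to another agent's state.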

How MCP Enhances Security Posture

MCP's structured approach provides several security advantages over traditional API-based integrations. The protocol's built-in metadata system enables granular permission tracking and real-time access control decisions based on current context and agent state.

Security enhancements include:

- Granular permission enforcement at the protocol level

- Comprehensive audit logging of all agent interactions

- Real-time context validation and security policy enforcement

- Bidirectional communication enabling adaptive security responses

- Standardized authentication and authorization frameworks

The protocol's bidirectional communication capabilities enable adaptive security responses where systems can dynamically adjust permissions or request additional validation based on agent behavior patterns.
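An adaptive response of this kind can be sketched as a simple risk score that maps to an action. The signals, point values, and thresholds below are illustrative assumptions chosen for the example, not values taken from MCP or from any product.

```python
def risk_points(failed_auths, uses_new_tool, outside_usual_hours):
    """Combine a few behavioral signals into an integer risk score."""
    points = min(failed_auths, 5) * 10           # cap the influence of repeated failures
    points += 30 if uses_new_tool else 0         # first use of a tool by this agent
    points += 20 if outside_usual_hours else 0   # activity outside the usual window
    return points


def security_response(points):
    """Map a risk score to an action; the thresholds are assumptions."""
    if points >= 80:
        return "deny"
    if points >= 50:
        return "require_approval"  # ask for additional validation before proceeding
    return "allow"
```

For example, an agent with two recent failed authentications that calls a tool it has never used scores 50 points and is asked for additional validation instead of being blocked outright.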

Best Practices for Securing MCP Implementations

Role-Based Access Control and Sandboxing

Deploy role-based access control that defines specific permissions for each AI agent based on its intended function and organizational role. Agent permissions should follow the principle of least privilege, granting only the minimum access necessary for the agent to operate.

Agent sandboxing adds a further layer of protection by isolating execution environments and preventing agents from reaching system resources outside their designated scope.
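A least-privilege role check can be very small. The sketch below uses hypothetical role and permission names and denies anything that is not explicitly granted, including requests from unknown roles.

```python
# Assumption: role and permission names are illustrative.
ROLE_PERMISSIONS = {
    "support-agent": frozenset({"tickets:read", "tickets:comment"}),
    "billing-agent": frozenset({"invoices:read"}),
}


def authorize(role, action):
    """Allow an action only if the role explicitly grants it (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())
```

Under these assumptions, `authorize("support-agent", "tickets:read")` is allowed, while `authorize("support-agent", "invoices:read")` and any call with an unrecognized role are denied.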

Input Validation and Sanitization

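Implement rigorous input validation to reduce the risk of prompt injection and other input-based exploits. All data flowing through MCP channels should be validated against expected formats and sanitized to remove malicious content. Validation alone cannot catch every injection attempt, so it works best alongside the other controls described in this guide.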

Critical validation controls:

- Schema validation for all MCP messages and metadata

- Content filtering to detect and block malicious prompts

- Context validation to ensure metadata integrity

- Rate limiting to prevent automated attack attempts

- Anomaly detection for unusual input patterns
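Two of the controls above, schema validation and rate limiting, can be sketched with the standard library alone. MCP messages follow JSON-RPC 2.0, so the validator checks that basic request envelope; the allowed-field set, the `RateLimiter` class, and its limits are assumptions made for illustration.

```python
from collections import deque

ALLOWED_FIELDS = {"jsonrpc", "id", "method", "params"}


def validate_request(msg):
    """Return a list of problems with a JSON-RPC 2.0 style request (empty if valid)."""
    if not isinstance(msg, dict):
        return ["message must be an object"]
    errors = []
    if msg.get("jsonrpc") != "2.0":
        errors.append("jsonrpc must be '2.0'")
    method = msg.get("method")
    if not isinstance(method, str) or not method:
        errors.append("method must be a non-empty string")
    if "params" in msg and not isinstance(msg["params"], (dict, list)):
        errors.append("params must be an object or array")
    unexpected = set(msg) - ALLOWED_FIELDS
    if unexpected:
        errors.append(f"unexpected fields: {sorted(unexpected)}")
    return errors


class RateLimiter:
    """Sliding-window limiter: at most max_requests per window per agent."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = {}  # agent_id -> deque of request timestamps

    def allow(self, agent_id, now):
        hits = self._hits.setdefault(agent_id, deque())
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

The caller supplies `now` (for example from `time.monotonic()`), which keeps the limiter easy to test. Structural validation like this rejects malformed messages but says nothing about whether the content is a malicious prompt, which is why content filtering and anomaly detection appear as separate controls.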

Runtime Behavioral Monitoring

Deploy runtime AI security monitoring systems that continuously analyze agent behavior for signs of compromise or malicious activity. These systems should establish baseline behavior patterns and alert security teams when significant deviations occur.

Machine learning-based anomaly detection can identify subtle behavior changes that indicate compromise or manipulation by monitoring request patterns, resource usage, and interaction frequencies.
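The baseline-and-deviation idea can be shown with a much simpler statistical stand-in for a machine learning model: a z-score over an agent's historical request rate. The function name, the sample data, and the threshold of three standard deviations are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag an observation that deviates strongly from the baseline.

    baseline: historical measurements (at least two), e.g. requests per minute.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Usage: requests per minute for one agent over recent intervals.
requests_per_minute = [10, 12, 11, 9, 10, 11]
normal = is_anomalous(requests_per_minute, 11)
burst = is_anomalous(requests_per_minute, 60)
```

Here a rate of 11 stays within the baseline while a burst of 60 is flagged. Real systems would track several signals per agent and tune thresholds to keep false positives manageable.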

Comprehensive Audit Logging

Maintain detailed audit logs of all MCP interactions, including successful operations, failed attempts, and security events. Logs should capture sufficient detail for forensic analysis and compliance reporting while protecting sensitive information.

Logging requirements:

- Complete request and response logging with timestamps

- Agent identity and permission context for each interaction

- Security event logging including failed authentication attempts

- Immutable log storage with cryptographic integrity protection
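Cryptographic integrity protection for logs is often built on a hash chain, where each entry commits to the one before it. The sketch below shows the idea with hypothetical `append_entry` and `verify_chain` helpers and an in-memory list standing in for real log storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event, prev_hash):
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_entry(log, event):
    """Append an event whose hash covers both the event and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(event, prev_hash)})


def verify_chain(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

Editing any earlier event breaks verification for that entry and everything after it. An attacker who can rewrite the entire log could still recompute the chain, so the latest hash should also be stored somewhere the logging system cannot modify, such as write-once storage.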

Complementary Security Technologies

AI-Powered Detection Engines

Deploy specialized detection engines that fingerprint AI agents, monitor intent patterns, and identify malicious activity in real-time. These systems use machine learning to understand normal agent behavior and detect sophisticated attacks that traditional security tools might miss.

Multi-Layered Security Architecture

Implement a multi-layered security architecture that combines MCP protocol-level controls with runtime enforcement mechanisms. This defense-in-depth strategy helps ensure that a failure at one layer does not compromise the entire system.

Integration with existing security infrastructure, including SIEM systems, identity providers, and network security tools, creates comprehensive protection that addresses threats at multiple levels.

Future Trends in MCP Security

Zero-Trust AI Security Models

Zero-trust security models for AI agents represent a fundamental shift requiring continuous verification and validation of all interactions. These models assume no implicit trust, implementing continuous authentication, real-time permission validation, and dynamic security policy enforcement.

Privacy-Preserving AI and Encrypted Communication

Advances in encrypted communication and privacy-preserving AI enable more secure MCP implementations. Technologies like homomorphic encryption, which permits computation directly on encrypted data, could allow AI agents to process sensitive data without exposing the plaintext to potentially compromised systems.

Multi-Agent Collaboration Security

Future security developments include:

- Securing complex multi-agent collaboration pipelines

- Protection against toxic agent flows and coordinated attacks

- Dynamic security policy adjustment based on workflow patterns

- Advanced threat intelligence for AI-specific attack vectors

Standardization and Community Security

The MCP ecosystem is evolving toward standardized security frameworks and community-driven security tooling, including common vulnerability databases, security testing frameworks, and shared threat intelligence for MCP-enabled AI systems.

Conclusion

The Model Context Protocol represents a transformative approach to securing agentic AI workflows, providing the structured framework necessary for safe, scalable AI agent deployments. MCP security implementations must address unique challenges including agent identity validation, real-time permission enforcement, and sophisticated attack vectors.

Organizations implementing MCP-enabled AI systems must adopt comprehensive security approaches combining protocol-level controls with runtime monitoring, behavioral analysis, and multi-layered defense strategies. The security benefits of MCP's structured approach become apparent when properly implemented alongside complementary security technologies.

As AI agents become increasingly autonomous, robust MCP security implementations become essential. Organizations that proactively address these security challenges while adopting MCP will unlock AI's full potential safely and securely.

Key takeaways for secure MCP implementation:

- Implement cryptographic token validation and comprehensive access controls

- Deploy runtime behavioral monitoring and anomaly detection systems

- Maintain detailed audit logging for compliance and forensic analysis

- Adopt zero-trust security models for continuous verification

- Integrate AI-powered detection engines for sophisticated threat identification

Ready to secure your MCP implementation? Start by assessing your current AI security posture, implementing cryptographic token validation, and establishing comprehensive monitoring systems. The investment in proper MCP security enables safe, scalable AI agent deployments that drive business value while protecting organizational assets.

